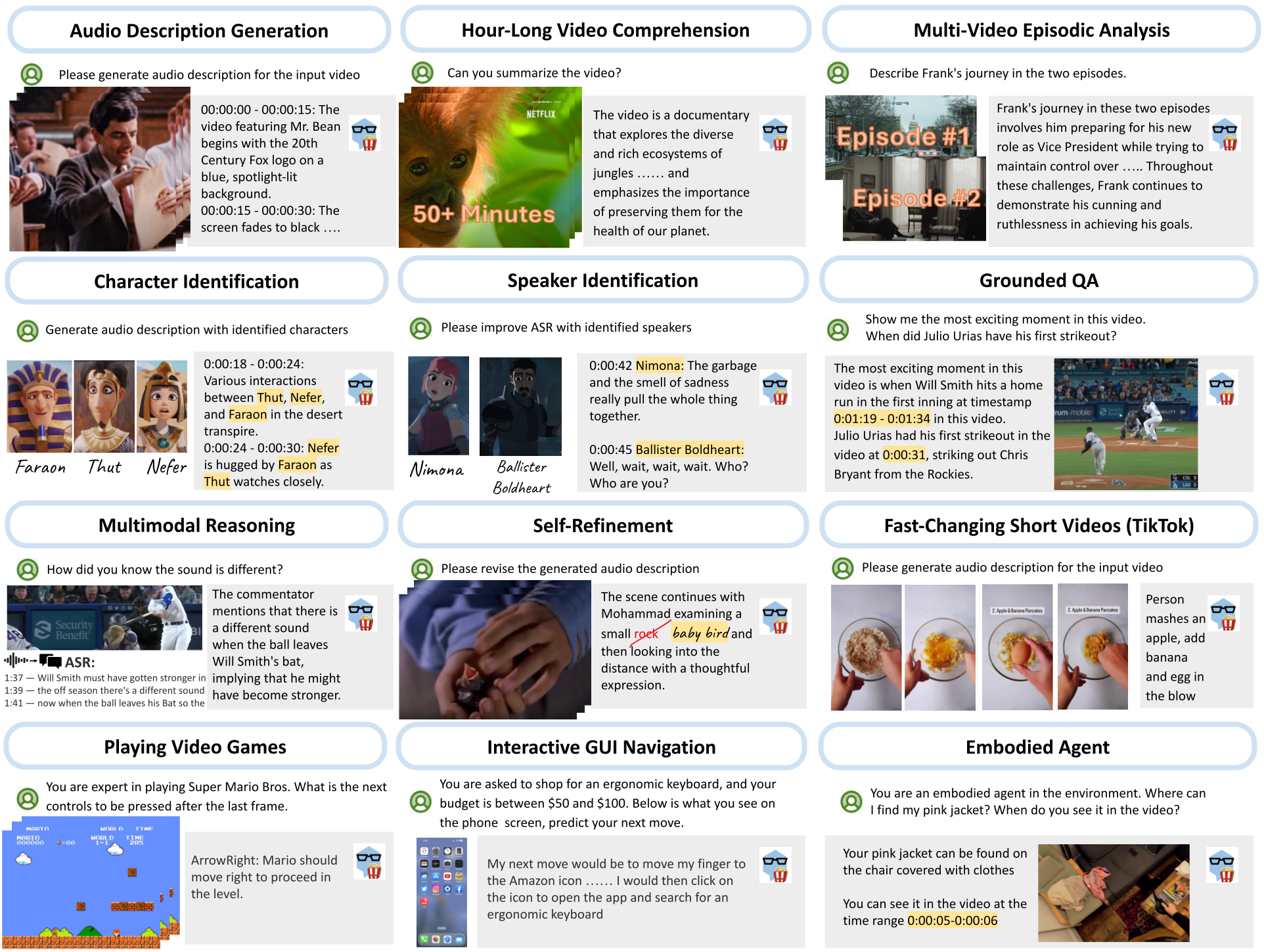

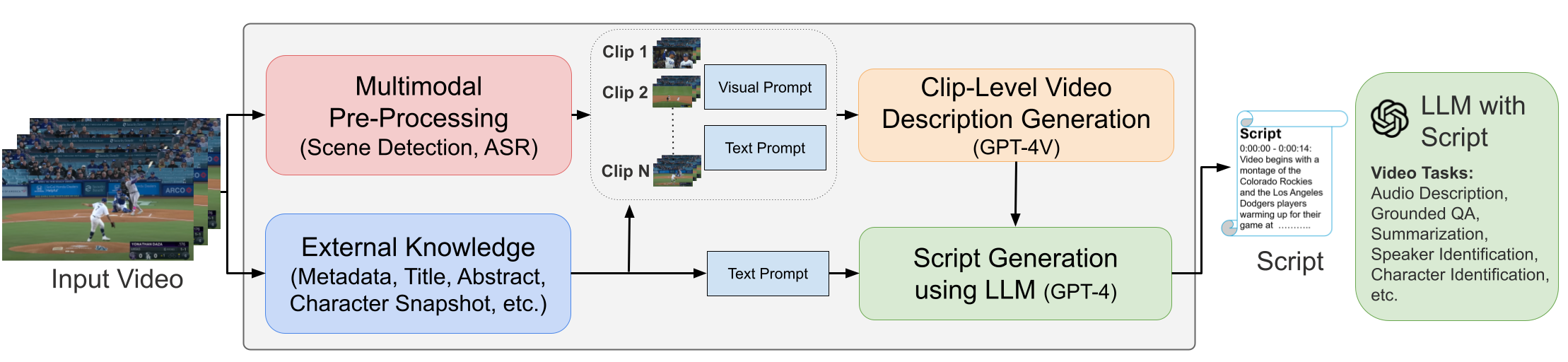

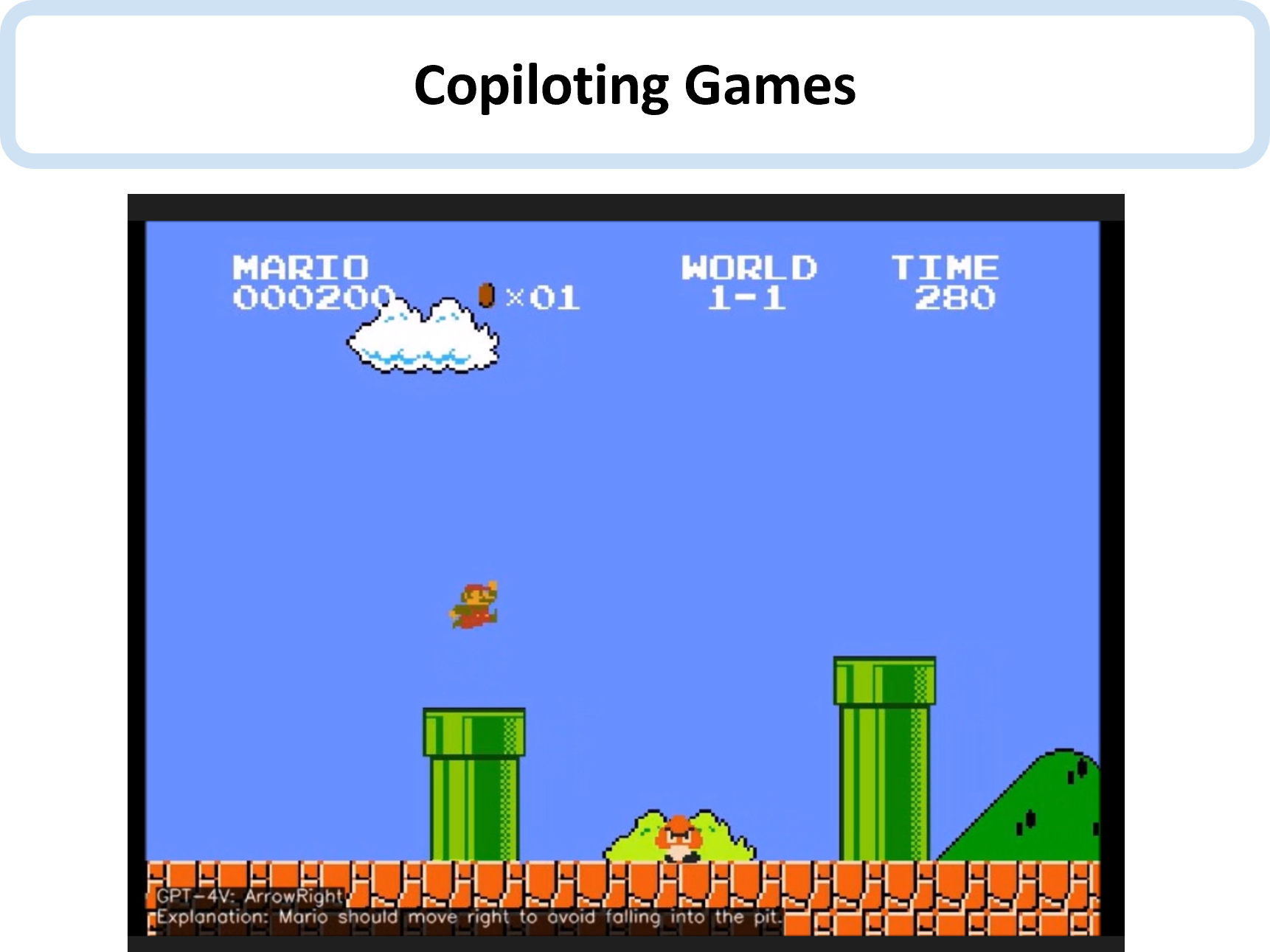

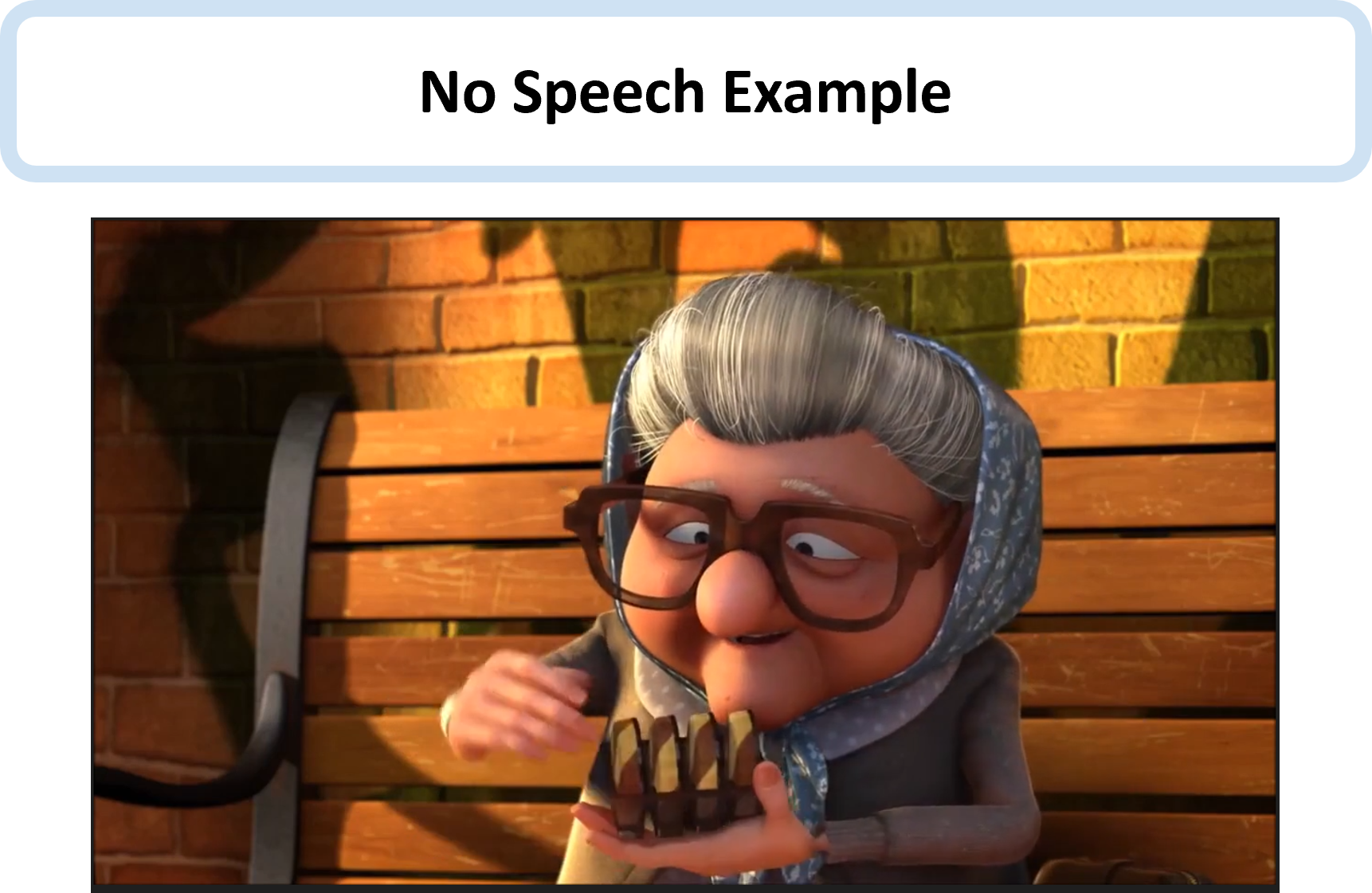

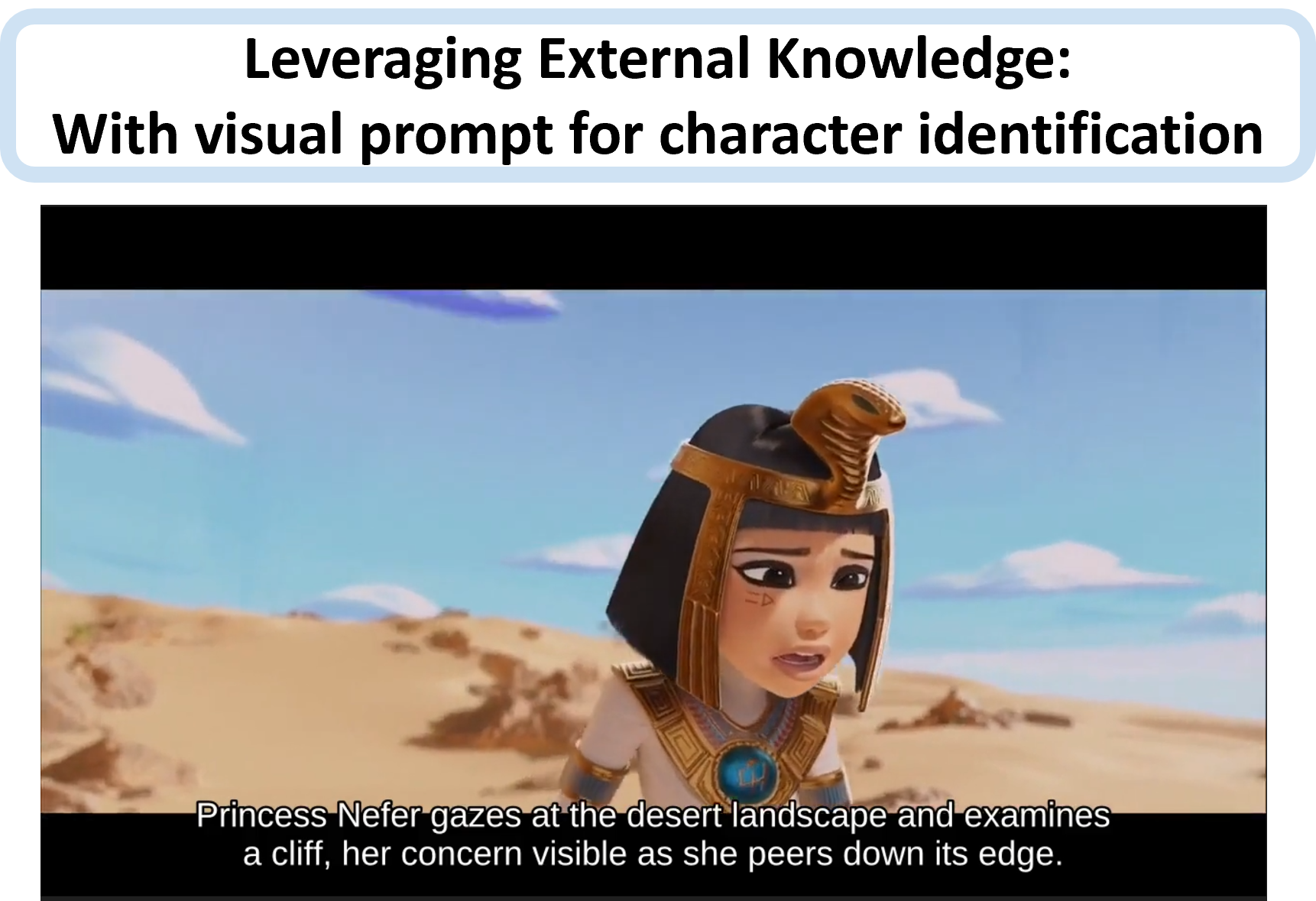

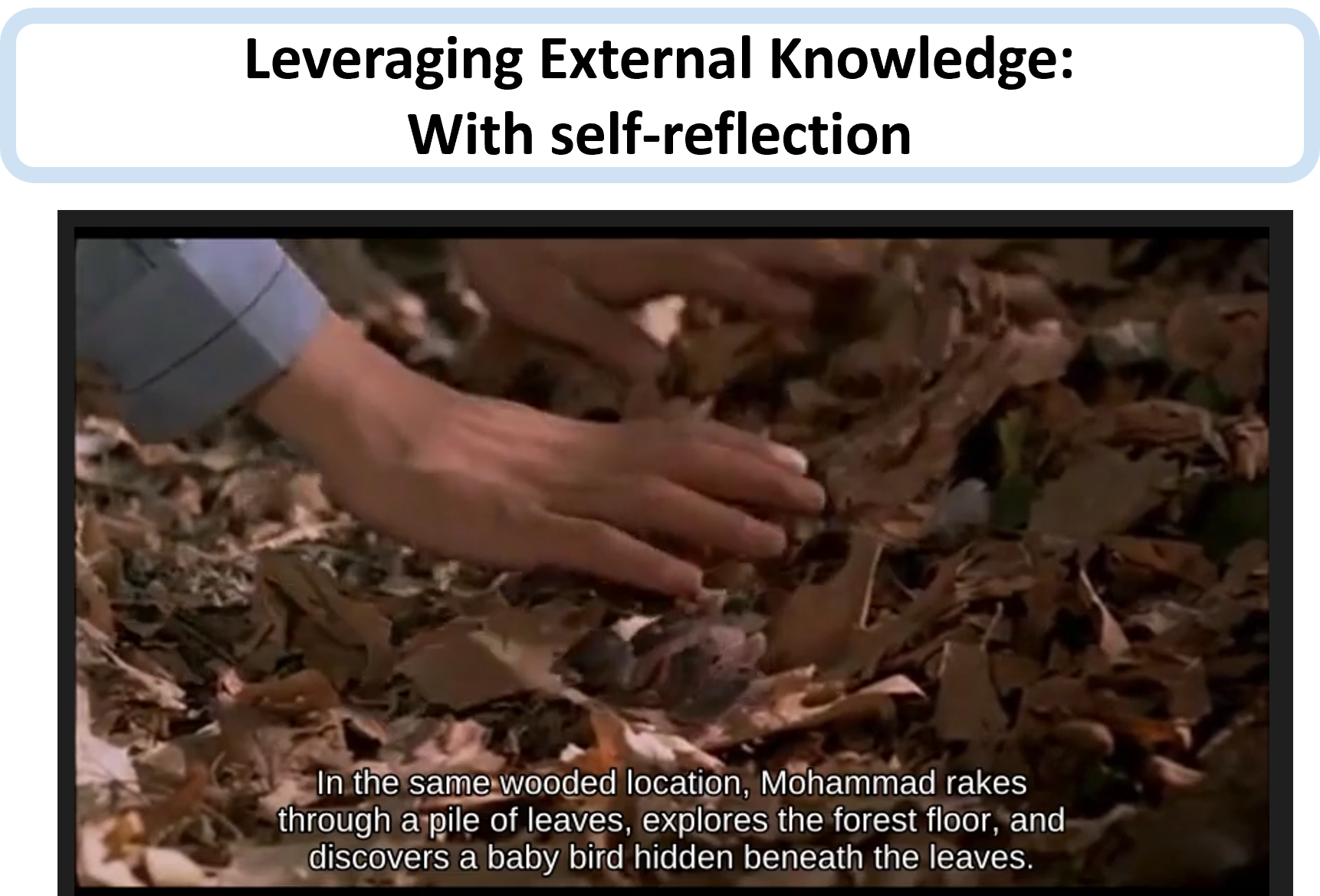

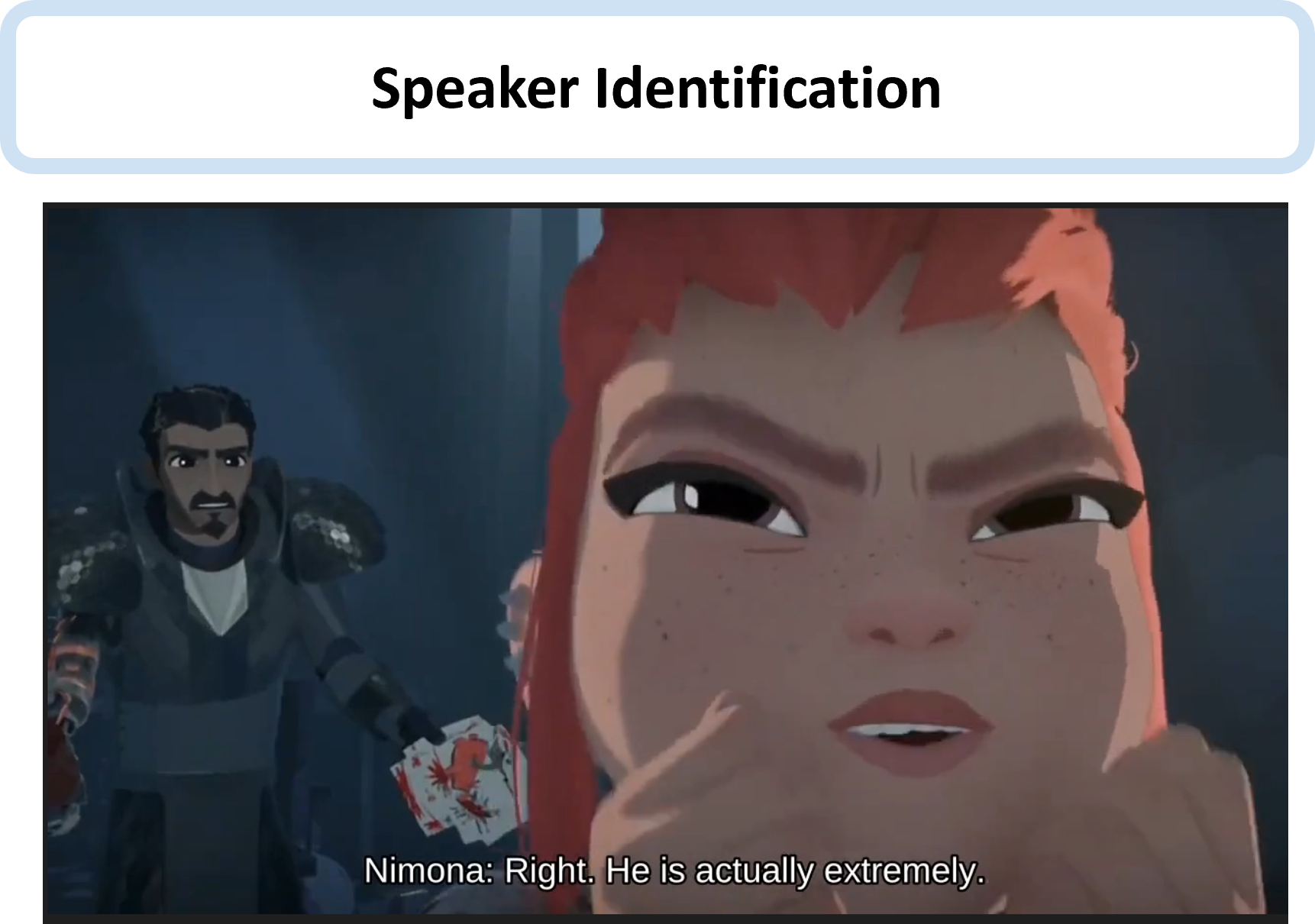

We present MM-VID, an integrated system that harnesses the capabilities of GPT-4V, combined with specialized tools in vision, audio, and speech, to facilitate advanced video understanding. MM-VID is designed to address the challenges posed by long-form videos and intricate tasks, such as reasoning within hour-long content and grasping storylines that span multiple episodes. MM-VID uses GPT-4V for video-to-script generation, transcribing multimodal elements into a long textual script. The generated script details character movements, actions, expressions, and dialogues, paving the way for large language models (LLMs) to achieve video understanding. This enables advanced capabilities, including audio description, character identification, and multimodal high-level comprehension. Experimental results demonstrate the effectiveness of MM-VID in handling diverse video genres of varying lengths. Additionally, we showcase its potential when applied to interactive environments, such as video games and graphical user interfaces.

@article{2023mmvid,
  author  = {Kevin Lin and Faisal Ahmed and Linjie Li and Chung-Ching Lin and Ehsan Azarnasab and Zhengyuan Yang and Jianfeng Wang and Lin Liang and Zicheng Liu and Yumao Lu and Ce Liu and Lijuan Wang},
  title   = {MM-Vid: Advancing Video Understanding with GPT-4V(ision)},
  journal = {arXiv preprint arXiv:2310.19773},
  year    = {2023},
}

We are deeply grateful to OpenAI for providing access to their exceptional tool. We are profoundly thankful to Misha Bilenko for his invaluable guidance and support. We also extend heartfelt thanks to our Microsoft colleagues for their insights, with special acknowledgment to Cenyu Zhang, Saqib Shaikh, Ailsa Leen, Jeremy Curry, Crystal Jones, Roberto Perez, Ryan Shugart, and Anne Taylor for their constructive feedback.

This website is adapted from Nerfies, licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.